IsaSAT Releases

SAT Competition 2024

There is not much to tell for the release for this year of the SAT Competition 2024. This year, I finished last, exactly as expected. However, I am very close to the second-to-last on SAT benchmarks.

My main performance problem seems to be UNSAT problems, which I blame on missing preprocessing. I intended to implement variable elimination based on the model reconstruction implemented in Katharina Wagner’s Master’s thesis in Isabelle. I actually started to implement it, but, as usual, once I went to proofs, things became more complicated. But it is interesting.

SAT solvers implicitly have two different sets of variables: the ones that can be used and the ones that appear in the clauses. All variables in clauses can be used… but the first set is one to be used to define the size of the various arrays. However, some variables might have been eliminated, making them still usable. Does not seem to be issue, but in my correctness proofs of CDCL, I merge these sets (and this is an invariant). In retrospect, it is obvious that it would be a problem… but I started that 9 years ago and it is the very first time that it is a roadblock.

The equality of the variable sets caused a minor issue: during subsumption, it can happen that a variable is removed. To avoid this issue, I simply kept the clauses but proved that I never have to use them for propagations/conflict (and the clauses is deleted from the concrete code, it is just kept for the proofs).

In my first attempt to add variable elimination, I thought I could just use the same trick. Except that it is annoying to keep the clauses and work on them. It is also not so obvious that all these clauses are not required to be considered for resolution during variable elimination, especially if some generated clauses are removed because of tautologies.

In my second attempt, I tried to just make tautologies out of subsumed clauses in order to ensure that we do need to consider those clauses for variable elimination because they are already tautologies. But that fails for unit clauses.

For my current attempt, let’s see if it works and if it fails again. Maybe I will be done by next year.

SAT Competition 2023

This year, once again, I submitted IsaSAT to the SAT Competition 2023 (the source code is as always available). According to the results, I did not finish last. However, compared to other solvers, I am trailing in the unsatisfiable instances. I believe that this is due to the fact that I still do not have bounded variable elimination. In the past, I assumed that vivification was also very important, but its importance is now less clear after Daniela and I attempted to examine it more precisely for POS'23 on the SAT solver CaDiCaL.

Something special happened this year: it turned out that my DRAT proofs are broken because the proof format is not strong enough to express what I want. I was aware of this problem before the competition (as it already existed last year).

My solver has two sets of clauses: the irredundant set N and the redundant set U, with the strict invariant N⊨U. To handle pure literals, I simply check for literals that appear only in one direction in N (all positive or all negative) and set the corresponding unit. For example, if N is A∨B, A∨¬B, B∨C, IsaSAT would first learn the unit clause A, delete all clauses containing A, and then learn the unit clauses B and C. This results in an empty problem.

This is legal (and is part of my proofs!). Technically, adding the units is a DRAT clause… but only if the negated literal does not appear in U. Given my strict invariants, I don’t care in IsaSAT. However, the DRAT checker has a different opinion on this because N and U cannot be distinguished (I have been told that VeriPB is able to do so, but did not look at it yet). Therefore, some of my proofs were rightfully rejected while IsaSAT did nothing incorrect and outputted the correct answer.

Other SAT solvers also face this issue, but they simply remove all the clauses containing a pure literal (both in N and U), making the transformation legal – but maybe a clause in U could have been useful for solving the problem.

Currently, there is no solution to this problem – execept that I only generate proofs for the SAT Competition, because it is mandatory in the main track, even though my SAT solver is entirely verified so the answer cannot be wrong.

Interestingly I found a heuristic bug after the submission, but it only helps on the SC2021 and SC2022 problems, not on the SC2023 problems. So this is a story for another time.

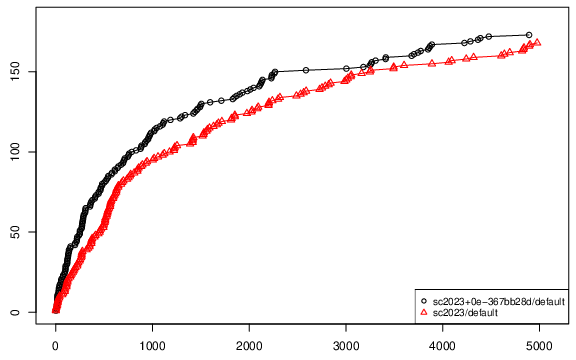

(sc2023 is basically the version submitted and the black one has the fix, see the PDF for a better version.)

SAT Competition 2022 and v2022-01

The second release is now available. It is the version submitted to the SAT Competition 2022. This year I managed to make IsaSAT work on StarExec.

Compared to the version form the EDA Challenge:

-

It can now produces DRAT proofs. There are absolutely no guarantees on the correctness of those proofs… And in particular, I have managed to produce wrong proofs when I forgot to log unit clauses. This does not matter because the entire solver is verified, but it is mandatory to take part in the Main track.

-

I have implemented pure literal deletion. IsaSAT search for literals that do not appear or appear in only one polarity and sets them to true. It is the first transformation performed by IsaSAT that does not preserve models.

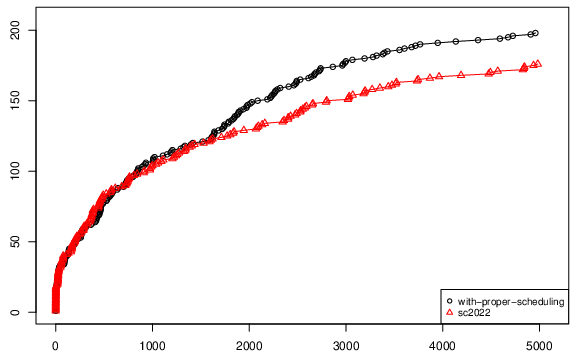

Shortly after the SAT Competition deadline, while drinking tea and looking at the HTML generated documentation, I found two heuristics bugs, which are solved in the release v2022-01. According to my benchmarks it solves 23 more problems on the benchmarks from the SAT Competition 2021:

| solved | sat | uns | par2 | |

|---|---|---|---|---|

| with-proper-scheduling | 198 | 87 | 111 | 2273168 |

| sc2022 | 176 | 85 | 91 | 2457296 |

(Better PDF version of the cactus plot). So, clearly a good fix. I will probably try to describe to the bug in more details, but in short: I was restarting way too little and reducing way too much. And I did not realise that because I swapped it when I printed it during the statistics.

EDA-Challenge 2021

The first official release is available. It is the version submitted to the EDA-Challenge 2021. To the surprise of everyone (including the author!), it actually won the challenge. The system description submitted to the EDA Challenge is available.

How good is the solver really? Another comparison is the well-known SAT Competition that features hard instances with long running time. The comparison is unfair towards my SAT solver (because you need inprocessing nowadays to even have a chance for most instances), but the SAT Competition is the standard way to compare solvers.

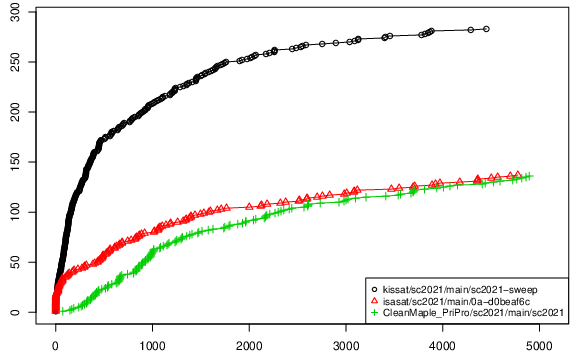

Here is a CDF with kissat (who finished second) and the solver who finished last during the SAT Competition 2021 (in the plot, higher is better!):

(Better PDF version). The conclusion is that IsaSAT would clearly not have won the SAT Competition 2021, but also would probably not have been the slowest solver (if I had been able to make IsaSAT work on Starexec). It is not a great result, but it is an indication how far you can go without inprocessing.

| Solved | ||||

|---|---|---|---|---|

| Solver | SAT+UNSAT | SAT | UNSAT | PAR-2 |

| Kissat | 283 | 137 | 146 | 1377523 |

| IsaSAT | 137 | 69 | 68 | 2795128 |

| CleanMaple_PriPro | 136 | 71 | 65 | 2868588 |

The conclusion is three-fold. First, it is possible to verify efficient code in a proof assistant. Second, there is no reason to believe that verified code is slower than hand-written one1 – even if it takes much more time to prove correctness. Third, the perfect SAT solver depends on the benchmarks you have.

-

I don’t believe that for verified code targetting Standard ML, however. Even if MLton is a very impressive compiler for Standard ML code. ↩︎